LET’S BE FWENDS ISSUE #6:

WHEN DID ‘INTELLIGENT’ BECOME ‘SCARY’?

Maybe when the robots took over and nobody noticed?

You’re being watched

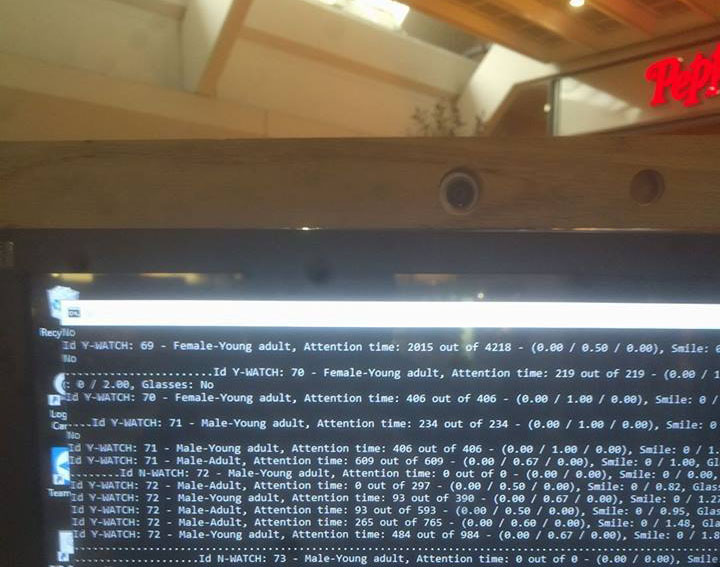

Nietzsche said, _“And if thou gaze long into an abyss, the abyss will also gaze into thee.”_ But who would have thought that the abyss would be an advertisement in a pizza place?

You’re standing in line, waiting to order your slice of pizza, while a neural network looks you in the eye and determines your age, your gender, and how your day was. All in the name of ‘customer experience’, while it tries to predict what you’re going to do next.

Sounds like Minority Report? It does. But unlike the blockbuster movie with Tom Cruise, this is reality:

(Source: https://twitter.com/GambleLee/status/862307447276544000)

The software used is called ‘Kairos’, and if you want to see what it can do, check out its feature list.

This type of intelligent system is scary because it is not transparent. It raises ethical dilemmas precisely because its processes are hidden. And these systems have to keep you in the dark: a system that classifies you without telling you about it cannot possibly have something good in store for you. That’s why they are so repulsive. They are evil, or on the verge of it.

The recent data-mining scandal at Facebook, in which over 6 million teenagers in vulnerable states (feeling “worthless,” “insecure,” “stressed,” “defeated,” “anxious,” and like a “failure”) were presented to advertisers as a target group, is a very good example of what can happen in the absence of transparency. As the article puts it:

“The big fear isn’t just what Facebook knows about its users but whether that knowledge can be weaponized in ways those users cannot see, and would never knowingly allow.”

Building better systems

How can we build efficient automation systems that avoid these kinds of situations? Some say the solution is to build ethics into these systems. I’m sceptical that this will work, mostly because we’re not very good at ethics. Take the famous trolley problem, or one of its many variations. You’d assume that we as a species would have solved such a problem by now, and especially before we consider implementing that kind of reasoning in software. We haven’t.

Whatever route we take, it appears that transparent decision-making processes are key to non-abusive, non-objectifying automation.

Transparency in User Interfaces

But if systems are becoming more and more complex, how are we going to achieve transparency? How must the interface between us and the machines evolve? Let’s take some inspiration from how science fiction tackles this problem, but be prepared to get lost in this Tumblr for quite some time:

http://sciencefictioninterfaces.tumblr.com

The friendly bot from the internet

But that doesn’t mean all machines are bad and automation should be avoided. There are some much less sinister incarnations of Djinns that can help you with your digital housekeeping. My friend Jon recently featured a list of bots that make his team’s life easier.

Vienna Biennale: Robots. Work. Our Future.

What does working mean in the digital age? And what is ‘living’? What role do robots have in our world, and what can be envisioned for the future? The Vienna Biennale sets out to explore the joint potential of robots and human work as an opportunity to bring about humanely motivated positive change. Visit the Vienna Biennale 2017, running from 21.06. until 01.10.

And here’s the site of choice when you decide to give a fuck: http://www.giveafuck.foundation/

That’s it from this edition of Let’s be Fwends. Thanks for reading, and if you can read this, it means the machines have not found you (yet). So now is a great opportunity to high-five yourself, or someone around you. 🤖
